Neural Network For Classification

Predict Handwritten Digits

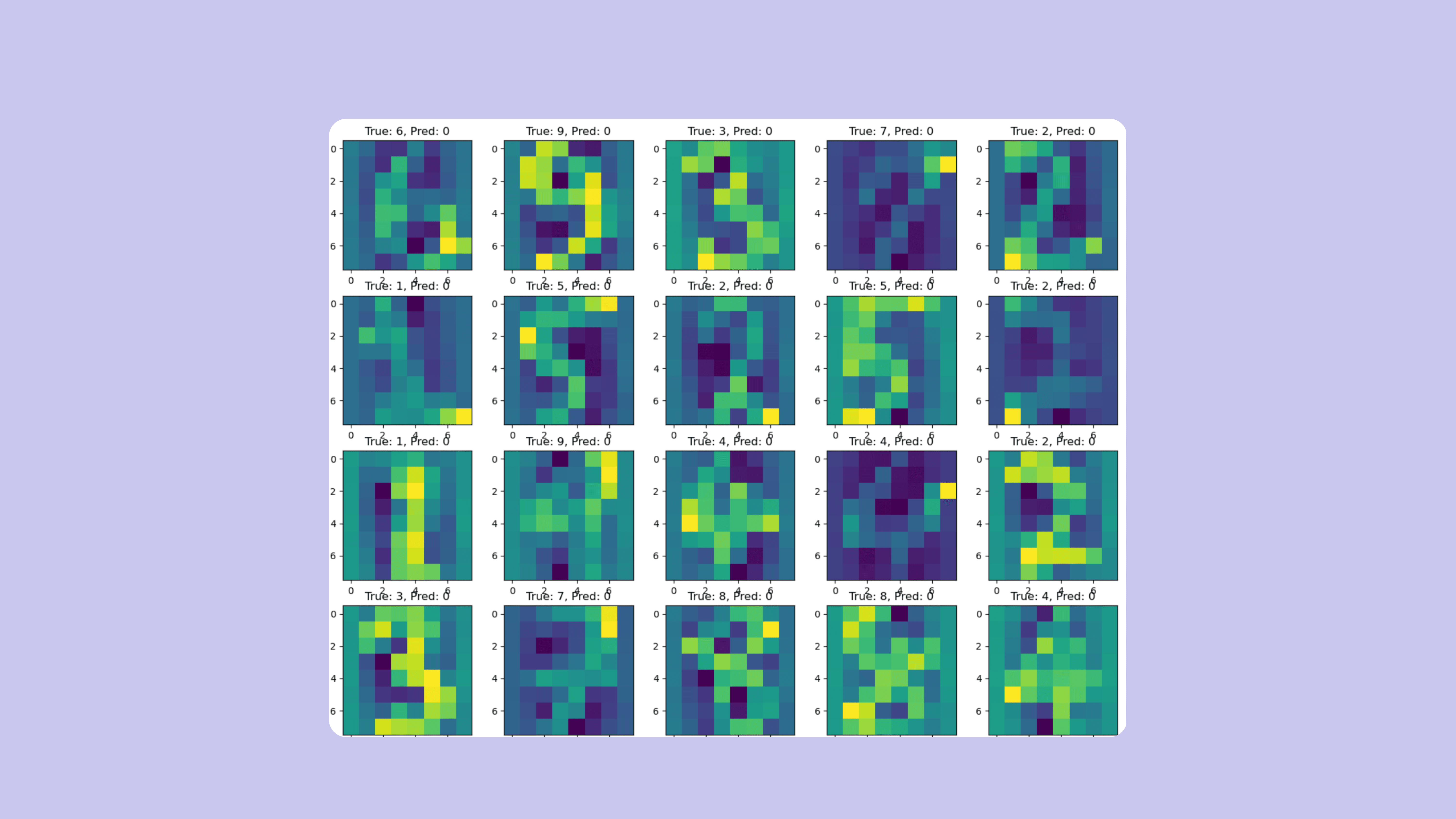

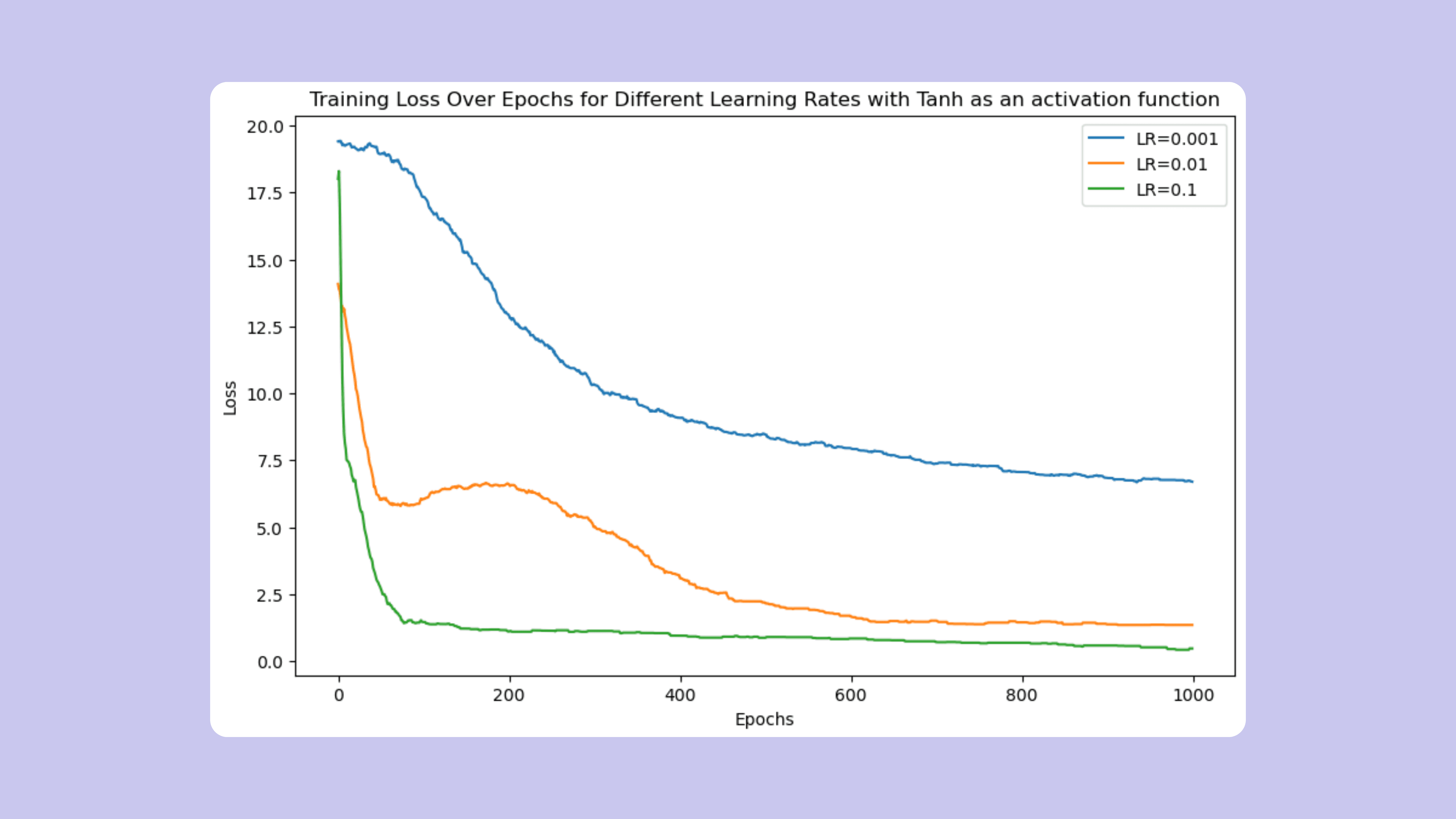

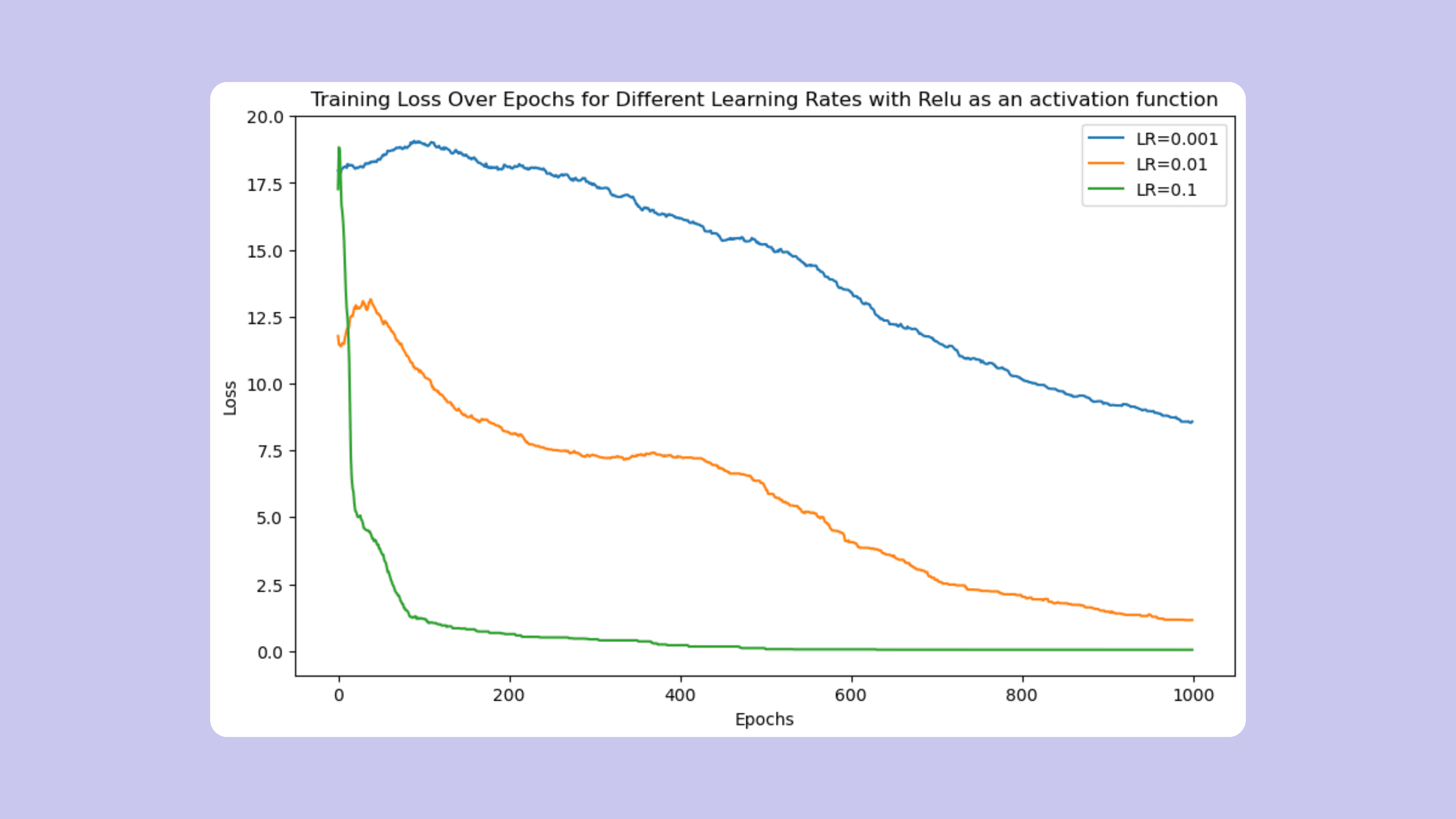

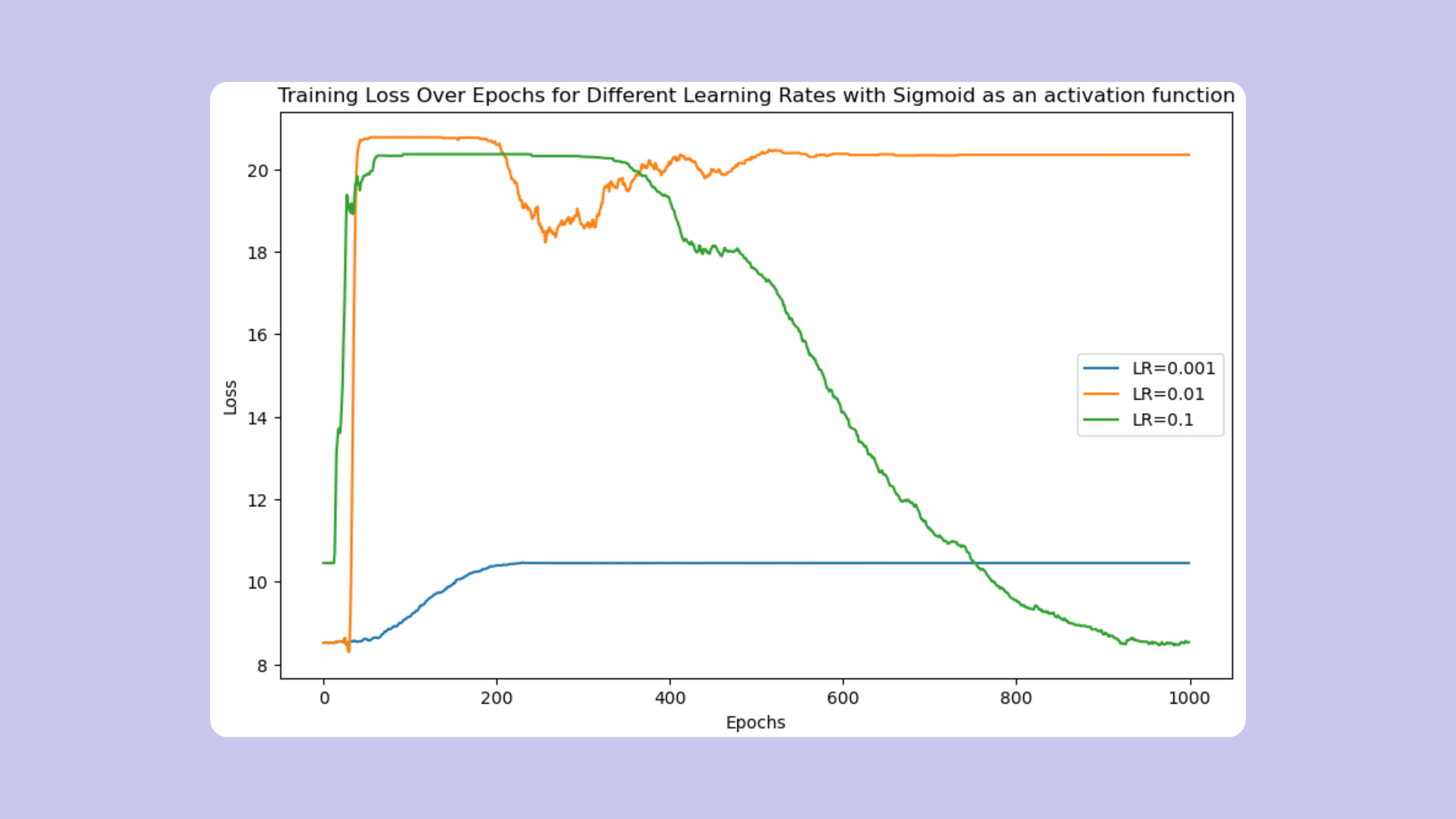

In this project, I built a neural network to classify handwritten digits. Input data flows through the layers, and each layer applies a linear transformation followed by an activation function (Sigmoid, TanH, or ReLU). The network has an input layer, a hidden layer, and an output layer, with adjacent layers fully connected. To minimize prediction error, I used Mean Squared Error (MSE) as the cost function and adjusted the network's weights through backpropagation and gradient descent. I also implemented the activation functions and their derivatives in Python, and refined the architecture by experimenting with different hidden-layer configurations.
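The forward pass, MSE cost, and backpropagation described above can be written compactly as follows. This is an illustrative derivation under stated assumptions, not the project's own notation: one hidden layer, a single activation φ shared by all layers, one-hot targets y with K = 10 outputs, the MSE averaged over those outputs, learning rate η, layer index l ∈ {1, 2}, and ⊙ for element-wise multiplication.

$$
\begin{aligned}
z^{(1)} &= W^{(1)}x + b^{(1)}, & a^{(1)} &= \varphi\big(z^{(1)}\big),\\
z^{(2)} &= W^{(2)}a^{(1)} + b^{(2)}, & \hat{y} &= \varphi\big(z^{(2)}\big),\\
L &= \frac{1}{K}\sum_{k=1}^{K}\big(\hat{y}_k - y_k\big)^2, & &\\
\delta^{(2)} &= \frac{\partial L}{\partial z^{(2)}} = \frac{2}{K}\big(\hat{y} - y\big)\odot\varphi'\big(z^{(2)}\big), & &\\
\delta^{(1)} &= \frac{\partial L}{\partial z^{(1)}} = \big(W^{(2)\top}\delta^{(2)}\big)\odot\varphi'\big(z^{(1)}\big), & &\\
\frac{\partial L}{\partial W^{(2)}} &= \delta^{(2)}\,a^{(1)\top}, & \frac{\partial L}{\partial b^{(2)}} &= \delta^{(2)},\\
\frac{\partial L}{\partial W^{(1)}} &= \delta^{(1)}\,x^{\top}, & \frac{\partial L}{\partial b^{(1)}} &= \delta^{(1)},\\
W^{(l)} &\leftarrow W^{(l)} - \eta\,\frac{\partial L}{\partial W^{(l)}}, & b^{(l)} &\leftarrow b^{(l)} - \eta\,\frac{\partial L}{\partial b^{(l)}}.
\end{aligned}
$$

The activation derivatives that fill in φ′ are standard (taking ReLU′(0) = 0 as a convention, since the derivative is undefined there):

$$
\begin{aligned}
\sigma(z) &= \frac{1}{1+e^{-z}}, & \sigma'(z) &= \sigma(z)\big(1-\sigma(z)\big),\\
\tanh(z) &= \frac{e^{z}-e^{-z}}{e^{z}+e^{-z}}, & \tanh'(z) &= 1-\tanh^{2}(z),\\
\operatorname{ReLU}(z) &= \max(0, z), & \operatorname{ReLU}'(z) &= \begin{cases}1, & z>0,\\ 0, & z\le 0.\end{cases}
\end{aligned}
$$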

Services: Python Machine Learning
Industries: Engineering
Date: March 2024

I built everything from scratch, starting by deriving the forward-propagation equations and the partial derivatives needed for backpropagation. I created three models, each using a single activation function in every layer: the first with Sigmoid, the second with TanH, and the third with ReLU. I computed the cost with Mean Squared Error (MSE) for each model and observed distinct results, due to the different output ranges of the activation functions. The only training hyperparameters were the learning rate and the number of epochs. For prediction, the network produces 10 output values, one per digit, and I took the digit with the largest value as the final output.
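A minimal pure-Python sketch of the approach described above, written for illustration and not taken from the project: it assumes one hidden layer, the same activation in every layer, the MSE averaged over the outputs, per-example gradient descent, and small uniform random initial weights. The class `TinyNet`, its methods, the `ACTIVATIONS` table, and the toy one-hot data are all hypothetical; real digit data would instead be flattened pixel vectors with 10 one-hot targets, and `predict` would then return a digit from 0 to 9.

```python
import math
import random


def sigmoid(z):
    # Numerically stable logistic function (avoids overflow in math.exp).
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


# name -> (activation, derivative); both take the pre-activation z.
ACTIVATIONS = {
    "sigmoid": (sigmoid, lambda z: sigmoid(z) * (1.0 - sigmoid(z))),
    "tanh": (math.tanh, lambda z: 1.0 - math.tanh(z) ** 2),
    # ReLU'(0) is undefined; this sketch uses 0 there.
    "relu": (lambda z: max(0.0, z), lambda z: 1.0 if z > 0 else 0.0),
}


class TinyNet:
    """Hypothetical one-hidden-layer, fully connected net; one activation for all layers."""

    def __init__(self, n_in, n_hidden, n_out, activation="sigmoid", seed=0):
        rng = random.Random(seed)
        self.f, self.df = ACTIVATIONS[activation]
        # Small uniform random weights (an assumed scheme) and zero biases.
        self.W1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.W2 = [[rng.uniform(-0.5, 0.5) for _ in range(n_hidden)] for _ in range(n_out)]
        self.b2 = [0.0] * n_out

    @staticmethod
    def _affine(W, b, v):
        # Linear transformation z = W v + b.
        return [sum(w * x for w, x in zip(row, v)) + bi for row, bi in zip(W, b)]

    def forward(self, x):
        z1 = self._affine(self.W1, self.b1, x)
        a1 = [self.f(z) for z in z1]
        z2 = self._affine(self.W2, self.b2, a1)
        return z1, a1, z2, [self.f(z) for z in z2]

    def loss(self, x, y):
        # MSE averaged over the output units.
        out = self.forward(x)[3]
        return sum((p - t) ** 2 for p, t in zip(out, y)) / len(y)

    def train_step(self, x, y, lr):
        """One gradient-descent step on one example; returns its MSE before the step."""
        z1, a1, z2, a2 = self.forward(x)
        k = len(y)
        loss = sum((p - t) ** 2 for p, t in zip(a2, y)) / k
        # Output deltas: dL/dz2 = (2/K)(a2 - y) * f'(z2).
        d2 = [2.0 / k * (p - t) * self.df(z) for p, t, z in zip(a2, y, z2)]
        # Hidden deltas: dL/dz1 = (W2^T d2) * f'(z1), computed before W2 is updated.
        d1 = [sum(self.W2[j][i] * d2[j] for j in range(len(d2))) * self.df(z1[i])
              for i in range(len(z1))]
        # Gradient descent: W <- W - lr * dL/dW and b <- b - lr * dL/db.
        for j, dj in enumerate(d2):
            for i, ai in enumerate(a1):
                self.W2[j][i] -= lr * dj * ai
            self.b2[j] -= lr * dj
        for i, di in enumerate(d1):
            for m, xm in enumerate(x):
                self.W1[i][m] -= lr * di * xm
            self.b1[i] -= lr * di
        return loss

    def fit(self, data, lr=0.5, epochs=100):
        """Per-example gradient descent; returns each epoch's mean pre-update loss."""
        history = []
        for _ in range(epochs):
            history.append(sum(self.train_step(x, y, lr) for x, y in data) / len(data))
        return history

    def predict(self, x):
        # Predicted class = index of the largest output value (argmax).
        out = self.forward(x)[3]
        return max(range(len(out)), key=out.__getitem__)


if __name__ == "__main__":
    # Toy stand-in data: 3 one-hot inputs mapped to 3 one-hot targets (not digits).
    data = [([1.0, 0.0, 0.0], [1.0, 0.0, 0.0]),
            ([0.0, 1.0, 0.0], [0.0, 1.0, 0.0]),
            ([0.0, 0.0, 1.0], [0.0, 0.0, 1.0])]
    for name in ACTIVATIONS:
        net = TinyNet(3, 6, 3, activation=name, seed=0)
        history = net.fit(data, lr=0.1, epochs=500)
        print(name, round(history[-1], 4), [net.predict(x) for x, _ in data])
```

Switching `activation` between `"sigmoid"`, `"tanh"`, and `"relu"` mirrors the three-model comparison. Because the output layer uses the same activation, the reachable output range differs (Sigmoid (0, 1), TanH (−1, 1), ReLU [0, ∞)), which changes how the MSE against 0/1 targets behaves. Plain lists keep the sketch dependency-free; at digit-image scale, a vectorized array library would be far faster.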